We recently upgraded our product to NAV2017 successfully. In fact, one day after the actual availability of the Belgian download, we had our upgraded product ready.

As promised, here is a short post about the challenges we faced during the upgrade. Probably not all challenges are represented here .. and I might have had issues that you won’t have :-). Keep that in mind.

The Scope

The first step for me is always an “upgrade” in the pure sense of the word. No redesign, no refactoring, no new features. Just the customized code transferred to the new version of NAV. That’s what this post is about. The next step (and release) for us is going to be incorporating the new features that Microsoft brings us.

Just to give you a feeling: this product of ours is quite big:

- 616 changed default objects – many tables

- 2845 new objects – which doesn’t really influence the merge process, though, these objects might call out to default (redesigned) code or objects ..

- +900 hooks into code – changed business logic, change of default behaviour of NAV

I was able to do this upgrade in less than 12 hours .. which is 4 times the time I spent last year. This is purely because of the significant refactoring that Microsoft has been doing. In numbers, this is what they did:

- 437 new objects

- 96 deleted objects

- 3286 changed objects

Do your own math ;-). The 96 deleted objects alone indicate a serious refactoring effort. And refactoring usually means: upgrade challenges.
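If you want similar numbers for your own localization, one rough way is to compare two folders of split object text files, one folder per version. The Python sketch below is only an illustration and not how the numbers above were produced: the one-file-per-object layout, the file naming (like `COD80.TXT`) and the function names are all assumptions. Keep in mind that exported objects carry a date, time and version list, so “changed” here simply means any difference in the file.

```python
from pathlib import Path


def load_objects(folder):
    """Map file name (e.g. 'COD80.TXT', an assumed naming scheme) to raw content."""
    return {p.name: p.read_bytes() for p in Path(folder).glob("*.TXT")}


def compare_versions(old, new):
    """Classify objects as new, deleted or changed between two versions.

    'old' and 'new' are dicts as returned by load_objects().
    """
    old_names, new_names = set(old), set(new)
    return {
        "new": sorted(new_names - old_names),
        "deleted": sorted(old_names - new_names),
        # Present in both versions, but the content differs
        "changed": sorted(n for n in old_names & new_names if old[n] != new[n]),
    }
```

Calling `compare_versions(load_objects(old_folder), load_objects(new_folder))` with your own two folders gives three lists whose lengths are the kind of counts shown above.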

How I did my upgrade

I upgraded our product in these steps:

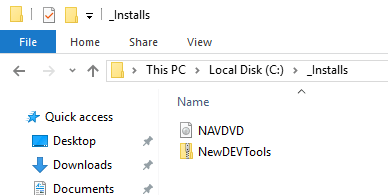

- I created a new VM with the final release of Microsoft Dynamics NAV 2017.

- I used my PowerShell scripts, which you can find on my GitHub, namely the ones in the scripts folder under “NAV Upgrades”. The thing is, the script “UpgradeDatabase.ps1” is going to do a full upgrade. I didn’t want that: I only wanted to merge, because I expected quite some conflicts given how much Microsoft refactored. So I stripped the script a bit and basically only executed the ‘Merge-NAVUpgradeObjects’ part. That gave me a folder with conflicts and merged text files.. .

- And indeed: quite some conflicts. This was expected. We will go into the issues later on, but I did two things to solve the conflicts:

  - I solved the majority just in the txt files with VSCode. Editing code in text files with VSCode makes so much sense – read below why.

  - I created a database with all my merged objects to solve the more complex conflicts, like the completely refactored codeunits 80 and 90. It gives you the “go to definition” stuff and such .. .

- Compile code, fix errors and create a fob: Create a full txt file and import it in an environment where you can compile all, and create a fob. I had a few compile errors, but these were easy to solve.

- Perform the database upgrade, including data

  - This one involves the upgrade toolkit. While testing this, it was clear that the default toolkit needed some work, and I also had to include some of my own tables that became redundant thanks to the code redesign.

  - Thanks to the “Start-NAVUpgradeDatabase” function as part of my scripts, this was quite easy. One tip: always fix the first error, then re-do the entire conversion, because the code in the upgrade toolkit isn’t ready for starting the data conversion multiple times .. .

After this final step, we had an upgraded database, including all upgrade code and upgrade toolkit for both the default application and my product.

Again, this doesn’t involve any significant code re-design to fit the new features. That’s what we will be doing from now on.

So, let’s dive into the things that are worth mentioning (in my book (no, I’m not writing a book …)).

Refactored objects

While solving the conflicts, you run into objects that have been refactored quite heavily by Microsoft. I think I know why they are doing this. Part of the reason must be facilitating more events, which needs to be done in refactored code .. . So let’s be clear: I totally support the fact that Microsoft is redesigning the code .. It’s a step we must take, although it doesn’t make the upgrade-life much easier.. .

These are a few points that I was struggling with.. :

COD414 and COD415 Release Sales/Purchase Document

OnRun moved to a “Code” trigger. The rest is quite the same, so merging is quite fine .. . Though, I would have expected a bigger change. While you’re already changing the main trigger, which will cause a conflict anyway, why not just refactor the whole thing? This is not really refactoring, this is moving and causing a conflict without any benefit…

COD448 – Job Queue Dispatcher

The Job Queue has totally changed. This is not a surprise, as it is running on a completely different platform, with a completely different concept, having multiple threads (workers?) waiting for the scheduler to drop something on its queue.. . I decided to ignore all my changes to the job queue, and start fresh. This will be something to look at after the upgrade.

COD5982 – Service-Post+Print

Microsoft removed a function “PrintReport” and put it on record-level, which means in this case: 4 places. I had one hook in this function .. and now it had to be placed in 4 places.. . This is the only place where “hooks” didn’t really serve me well, as I don’t want to place one hook function in four places.. .

The redesign does make sense, though, so I’m not complaining. I created new hooks, and off we go :-).

COD82 – Sales-Post + Print

Similar changes (I like the consistency). Again, the PrintReport was moved to rec-level, which didn’t make my hook-moving go swell.. but I survived :-).

COD80:

Must be the most refactored codeunit together with COD90. The code seems to still be all there in CU80, only in (local) functions. So this was really just a matter of moving my hooks to the right local function. You could see that the code structure was still quite the same, so it wasn’t that difficult to find out where the hook had to go.. .

A few other things caught my attention though, that weren’t always that easy ..

- Changed Text constants: all text constants have a decent name now. Instead of something like Text30, it actually describes the text.. . This does mean a lot more code changes, and some more conflicts.. .

- Changed Var names: a little more difficult. In some places, I noticed changes like “GenJnlLine2” renamed to “GenJnlLine” or “SalesLine” changed to “SalesOrderLine”. It is refactoring, it is making it more readable .. but in some cases, you really have to keep your head in the game to solve conflicts .. .

- From Temp to no-temp (and vice versa). This was the hardest thing to do. And I still have to test everything to see if I merged correctly. The thing is: I have the feeling they completely changed the “Preview Posting” thing by doing stuff in Temp Tables and such. This meant that all of a sudden, I was getting Temp variables in my hooks .. . So I really had to pay attention to what to do in those scenarios.. .

I handled CU80 and CU90 manually in the database, and made all my changes by hand, without the automatic merge. It does make sense, because I could navigate through code to find out what to do in all 24 points I hooked into this codeunit. Tests will still have to show whether I did a good job or not ;-).

TAB37:

VAT calculation has moved to the “UpdateLines” function on the VATAmountLine table. Moving this function means moving your hook to another hook codeunit .. . It does make more sense, but in my book, this is one of the more challenging things to do ;-).

ApprovalsMgmt Turned into Variant Façade

So, code like:

ApprovalsMgmt.DeleteApprovalEntry(DATABASE::"Sales Header","Document Type","No.");

Needs to be changed to:

ApprovalsMgmt.DeleteApprovalEntry(SalesHeader);

We used this kind of code quite a lot in our product.. . Some work, but the refactoring makes so much sense. Love it!

Item Category

Default fields moved to “Item Template” .. . This is quite a big change, because we added 6 extra default fields .. and created some functionality on top of this. These default fields being moved to the item template means we need to rethink our functionality as well .. . This is for after the upgrade, as in my opinion, “rethinking functionality” should not be part of the upgrade.

Pages

Pages are not easy. This is a refactoring-bit that I don’t like. Microsoft tends to put stuff on pages. Like:

- ShowMandatory – added in NAV2015, as a property on a field :(

- Tooltips – added in NAV2017: again a page-field-level property to add multi-language tooltips :(

- ApplicationArea – added in NAV2017: again a page-field-level property to assign fields to certain application areas (to be able to simplify (and hide fields on) pages for users).

I absolutely hate this design principle from the bottom of my heart. It’s unreadable, unmaintainable, unextendable, .. .

So if you would have changed or deleted any of these fields on pages, you would have conflicts. You get things like this:

In my case: LOADS of them.. . Thing is: also on moved fields you get this :(. I had conflicts on about all fields that we changed – just because of the design of these new features (page-field-level…).

This was just one example. Other very recurring conflicts were:

- Code Modifications

- Changed properties, like

  - Promoted

  - PromotedCategory

  - PromotedActionCategoryML

  - CaptionML (character problems)

Furthermore, on a lot of pages, the last statement in a piece of code has no closing semicolon .. which makes it difficult to compile once code has been (automatically) merged in after it. Here is an example of Page30

A few numbers:

- I had 13 conflicts in the Customer Card,

- 57 out of 88 conflicting objects were pages!

- There was an average of 5 conflicts per page .. .

- Worst ones:

  - Sales Order / Quote (and any document pages for that matter)

  - Customer Card / List

  - Item Card / List

I was able to handle them all in just text files.. . But no advice here – you’ll just have to work your way through them :(.
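To get a feeling for where your own conflicts sit before you start, you could count the conflict files per object type. The Python sketch below assumes that the merge result contains one file per conflicting object, named like `PAG21.CONFLICT`. That naming, the prefix table and the function name are assumptions for illustration, so check them against what your own merge actually produces.

```python
import re
from collections import Counter

# Assumed three-letter prefixes of split NAV object files
TYPE_NAMES = {
    "TAB": "Table",
    "PAG": "Page",
    "COD": "Codeunit",
    "REP": "Report",
    "XML": "XMLport",
    "QUE": "Query",
    "MEN": "MenuSuite",
}

CONFLICT_FILE = re.compile(r"^([A-Z]{3})(\d+)\.CONFLICT$", re.IGNORECASE)


def conflicts_by_type(file_names):
    """Count conflict files per object type; other files are ignored."""
    counts = Counter()
    for name in file_names:
        match = CONFLICT_FILE.match(name)
        if match:
            prefix = match.group(1).upper()
            counts[TYPE_NAMES.get(prefix, prefix)] += 1
    return counts
```

Feeding it the file names of the result folder (for example via `os.listdir`) gives a quick overview of which object types will cost you the most time.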

Deleted objects

In my case (BE upgrade from 2016 CU9 to 2017), a total of 96 objects were deleted. The chance that you have references, table relations or whatever to any of these objects is quite big. This is something that you’ll need to handle after the upgrade while compiling your code. It depends very much on what and how you’re using these objects.

Why are there so many deleted objects? Well .. so much has been refactored .. like:

- reworked notifications

- reworked CRM wizards

- reworked job queue

- …

In my case, only one conflict here. Namely, the “Credit limit check” has seriously changed. It works with Notifications now. We tapped into it, so we needed to revise the complete solution (which ended up with us removing it :-))

Irregularities

What really surprised me is some irregularities in Microsoft’s code. Sometimes, this was really confusing. One example that comes to mind is the variable “RowIsNotText” in page 46. This variable is used to handle the “Enabled” and “Editable” property of some fields. You would expect this variable to exist in all similar “subforms”. Well – no. In fact, in all other subforms, there was the similar but completely opposite variable “RowIsText”. Be careful with this!

Upgrade Toolkit

Once I had compiled objects (all merged, imported and compilation errors solved), I started to work on my data upgrade. Of course I used the upgrade toolkit that comes with the product.

I had two issues in this.

The upgrade toolkit contains an error in the function UpdateItemCategoryPostingGroups. It seems that a temp table and an AutoIncrement field don’t go well together. When you have multiple Item Categories, this function failed from the second entry on .. . I did a dirty fix on this: just making sure the table is empty, so that the OnInsert code is able to run.

Another issue was that Microsoft didn’t do an “INSERT(TRUE)” in UpdateSMTPMailSetupEncryptPassword. The OnInsert needs to run, but Microsoft had put just “INSERT”, not “INSERT(TRUE)”, which seemed like a bug (no GUID was created). We changed it to INSERT(TRUE).

Solving conflicts with VSCode

Just for the sake of getting familiar with VSCode, I decided to handle conflicts in this editor. Sure, it’s not code, it’s just TXT files .. but why not :-). It is a code editor .. and text file or not – I’m editing code.

Here is what it looked like:

A few things that I really liked about this approach.

- GitHub Integration: I saved all to GitHub. This made it possible for me to see what I did to solve a conflict. Always good to be able to review everything.

- File explorer / navigate between files. All files were just there. Very easy.

- Open window on the side (CTRL + Enter). As you can see on the screenshot, I could show two files at once. Because of the “big” conflict files, this was quite convenient. Finding the right spot is also just a copy/paste-in-search kind of thing, without too much switching between screens. Very easy.

- Easy search (select + CTRL+F) and find previous (shift+Enter). As said, it behaves like a code editor. This was one of the places this showed really well. Like when you’re looking for conflicting control ids. Just select the id, press CTRL+F, automatically it will search for what you have selected, and all matches get highlighted.


- Smart carriage return: it will end up on the place you want it to be – like you expect from a code editor, but not from a text editor.
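The search for conflicting control ids mentioned above can also be scripted as a sanity check after merging. The Python sketch below is a rough illustration, not part of my scripts: it assumes that controls and actions in an exported page start with a line like `{ 2   ;1   ;Field     ;` (id first, then the indentation level), and the function name is made up for this example.

```python
import re
from collections import Counter

# Assumed shape of a control/action line in a page text export:
# '{ <id> ;<indentation> ;<type> ;'
CONTROL_LINE = re.compile(r"^\s*\{\s*(\d+)\s*;\s*\d+\s*;")


def duplicate_control_ids(page_text):
    """Return the sorted ids that occur on more than one control line."""
    ids = []
    for line in page_text.splitlines():
        match = CONTROL_LINE.match(line)
        if match:
            ids.append(int(match.group(1)))
    return sorted(i for i, count in Counter(ids).items() if count > 1)
```

Run it on the text of a merged page file; any id it returns is worth looking up in VSCode with the select + CTRL+F trick.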

I will do this every time from now on :-).

Conclusion

I was happy to be able to have an upgrade in only 12 hours. Taking into account that so much was refactored, plus the most conflicts I had to solve in over 3 years .. it was still “just” 12 hours. Still, it’s 4 times the time that I spent in my previous upgrade .. . I hope this doesn’t mean that someone who did 1 month last time, will spend 4 months ;-).

Microsoft has given us the tools to do this as efficiently as possible. Yes, I’m talking about PowerShell. My scripts on top of these building blocks have helped me tremendously again .. I can only recommend them to you.

“Hooks” helped me a lot again this time as well. Putting them into the refactored code was quite easy. I spent more time on some pages than I did on Codeunit 80.

So .. good luck, hope this helps you a bit.

.. and above all .. join!
